sharded-slab-0.1.4/.clog.toml�����������������������������������������������������������������������0000644�0000000�0000000�00000001632�00726746425�0014110�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������[clog]

# A repository link with the trailing '.git' which will be used to generate

# all commit and issue links

repository = "https://github.com/hawkw/sharded-slab"

# A constant release title

# subtitle = "sharded-slab"

# specify the style of commit links to generate, defaults to "github" if omitted

link-style = "github"

# The preferred way to set a constant changelog. This file will be read for old changelog

# data, then prepended to for new changelog data. It's the equivilant to setting

# both infile and outfile to the same file.

#

# Do not use with outfile or infile fields!

#

# Defaults to stdout when omitted

changelog = "CHANGELOG.md"

# This sets the output format. There are two options "json" or "markdown" and

# defaults to "markdown" when omitted

output-format = "markdown"

# If you use tags, you can set the following if you wish to only pick

# up changes since your latest tag

from-latest-tag = true

������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/.github/workflows/ci.yml���������������������������������������������������������0000644�0000000�0000000�00000004101�00726746425�0016716�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������name: CI

on: [push]

jobs:

check:

name: check

runs-on: ubuntu-latest

strategy:

matrix:

rust:

- stable

- nightly

- 1.42.0

steps:

- uses: actions/checkout@master

- name: Install toolchain

uses: actions-rs/toolchain@v1

with:

profile: minimal

toolchain: ${{ matrix.rust }}

override: true

- name: Cargo check

uses: actions-rs/cargo@v1

with:

command: check

args: --all-features

test:

name: Tests

runs-on: ubuntu-latest

needs: check

steps:

- uses: actions/checkout@master

- name: Install toolchain

uses: actions-rs/toolchain@v1

with:

profile: minimal

toolchain: nightly

override: true

- name: Run tests

run: cargo test

test-loom:

name: Loom tests

runs-on: ubuntu-latest

needs: check

steps:

- uses: actions/checkout@master

- name: Install toolchain

uses: actions-rs/toolchain@v1

with:

profile: minimal

toolchain: nightly

override: true

- name: Run Loom tests

run: ./bin/loom.sh

clippy_check:

runs-on: ubuntu-latest

needs: check

steps:

- uses: actions/checkout@v2

- name: Install toolchain

uses: actions-rs/toolchain@v1

with:

profile: minimal

toolchain: stable

components: clippy

override: true

- name: Run clippy

uses: actions-rs/clippy-check@v1

with:

token: ${{ secrets.GITHUB_TOKEN }}

args: --all-features

rustfmt:

name: rustfmt

runs-on: ubuntu-latest

needs: check

steps:

- uses: actions/checkout@v2

- name: Install toolchain

uses: actions-rs/toolchain@v1

with:

profile: minimal

toolchain: stable

components: rustfmt

override: true

- name: Run rustfmt

uses: actions-rs/cargo@v1

with:

command: fmt

args: -- --check

���������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/.github/workflows/release.yml����������������������������������������������������0000644�0000000�0000000�00000001067�00726746425�0017753�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������name: Release

on:

push:

tags:

- v[0-9]+.*

jobs:

create-release:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- uses: taiki-e/create-gh-release-action@v1

with:

# Path to changelog.

changelog: CHANGELOG.md

# Reject releases from commits not contained in branches

# that match the specified pattern (regular expression)

branch: main

env:

# (Required) GitHub token for creating GitHub Releases.

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/.gitignore�����������������������������������������������������������������������0000644�0000000�0000000�00000000036�00726746425�0014176�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������target

Cargo.lock

*.nix

.envrc��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/CHANGELOG.md���������������������������������������������������������������������0000644�0000000�0000000�00000013260�00726746425�0014022�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������

### 0.1.4 (2021-10-12)

#### Features

* emit a nicer panic when thread count overflows `MAX_SHARDS` (#64) ([f1ed058a](https://github.com/hawkw/sharded-slab/commit/f1ed058a3ee296eff033fc0fb88f62a8b2f83f10))

### 0.1.3 (2021-08-02)

#### Bug Fixes

* set up MSRV in CI (#61) ([dfcc9080](https://github.com/hawkw/sharded-slab/commit/dfcc9080a62d08e359f298a9ffb0f275928b83e4), closes [#60](https://github.com/hawkw/sharded-slab/issues/60))

* **tests:** duplicate `hint` mod defs with loom ([0ce3fd91](https://github.com/hawkw/sharded-slab/commit/0ce3fd91feac8b4edb4f1ece6aebfc4ba4e50026))

### 0.1.2 (2021-08-01)

#### Bug Fixes

* make debug assertions drop safe ([26d35a69](https://github.com/hawkw/sharded-slab/commit/26d35a695c9e5d7c62ab07cc5e66a0c6f8b6eade))

#### Features

* improve panics on thread ID bit exhaustion ([9ecb8e61](https://github.com/hawkw/sharded-slab/commit/9ecb8e614f107f68b5c6ba770342ae72af1cd07b))

## 0.1.1 (2021-1-4)

#### Bug Fixes

* change `loom` to an optional dependency ([9bd442b5](https://github.com/hawkw/sharded-slab/commit/9bd442b57bc56153a67d7325144ebcf303e0fe98))

## 0.1.0 (2020-10-20)

#### Bug Fixes

* fix `remove` and `clear` returning true when the key is stale ([b52d38b2](https://github.com/hawkw/sharded-slab/commit/b52d38b2d2d3edc3a59d3dba6b75095bbd864266))

#### Breaking Changes

* **Pool:** change `Pool::create` to return a mutable guard (#48) ([778065ea](https://github.com/hawkw/sharded-slab/commit/778065ead83523e0a9d951fbd19bb37fda3cc280), closes [#41](https://github.com/hawkw/sharded-slab/issues/41), [#16](https://github.com/hawkw/sharded-slab/issues/16))

* **Slab:** rename `Guard` to `Entry` for consistency ([425ad398](https://github.com/hawkw/sharded-slab/commit/425ad39805ee818dc6b332286006bc92c8beab38))

#### Features

* add missing `Debug` impls ([71a8883f](https://github.com/hawkw/sharded-slab/commit/71a8883ff4fd861b95e81840cb5dca167657fe36))

* **Pool:**

* add `Pool::create_owned` and `OwnedRefMut` ([f7774ae0](https://github.com/hawkw/sharded-slab/commit/f7774ae0c5be99340f1e7941bde62f7044f4b4d8))

* add `Arc::get_owned` and `OwnedRef` ([3e566d91](https://github.com/hawkw/sharded-slab/commit/3e566d91e1bc8cc4630a8635ad24b321ec047fe7), closes [#29](https://github.com/hawkw/sharded-slab/issues/29))

* change `Pool::create` to return a mutable guard (#48) ([778065ea](https://github.com/hawkw/sharded-slab/commit/778065ead83523e0a9d951fbd19bb37fda3cc280), closes [#41](https://github.com/hawkw/sharded-slab/issues/41), [#16](https://github.com/hawkw/sharded-slab/issues/16))

* **Slab:**

* add `Arc::get_owned` and `OwnedEntry` ([53a970a2](https://github.com/hawkw/sharded-slab/commit/53a970a2298c30c1afd9578268c79ccd44afba05), closes [#29](https://github.com/hawkw/sharded-slab/issues/29))

* rename `Guard` to `Entry` for consistency ([425ad398](https://github.com/hawkw/sharded-slab/commit/425ad39805ee818dc6b332286006bc92c8beab38))

* add `slab`-style `VacantEntry` API ([6776590a](https://github.com/hawkw/sharded-slab/commit/6776590adeda7bf4a117fb233fc09cfa64d77ced), closes [#16](https://github.com/hawkw/sharded-slab/issues/16))

#### Performance

* allocate shard metadata lazily (#45) ([e543a06d](https://github.com/hawkw/sharded-slab/commit/e543a06d7474b3ff92df2cdb4a4571032135ff8d))

### 0.0.9 (2020-04-03)

#### Features

* **Config:** validate concurrent refs ([9b32af58](9b32af58), closes [#21](21))

* **Pool:**

* add `fmt::Debug` impl for `Pool` ([ffa5c7a0](ffa5c7a0))

* add `Default` impl for `Pool` ([d2399365](d2399365))

* add a sharded object pool for reusing heap allocations (#19) ([89734508](89734508), closes [#2](2), [#15](15))

* **Slab::take:** add exponential backoff when spinning ([6b743a27](6b743a27))

#### Bug Fixes

* incorrect wrapping when overflowing maximum ref count ([aea693f3](aea693f3), closes [#22](22))

### 0.0.8 (2020-01-31)

#### Bug Fixes

* `remove` not adding slots to free lists ([dfdd7aee](dfdd7aee))

### 0.0.7 (2019-12-06)

#### Bug Fixes

* **Config:** compensate for 0 being a valid TID ([b601f5d9](b601f5d9))

* **DefaultConfig:**

* const overflow on 32-bit ([74d42dd1](74d42dd1), closes [#10](10))

* wasted bit patterns on 64-bit ([8cf33f66](8cf33f66))

## 0.0.6 (2019-11-08)

#### Features

* **Guard:** expose `key` method #8 ([748bf39b](748bf39b))

## 0.0.5 (2019-10-31)

#### Performance

* consolidate per-slot state into one AtomicUsize (#6) ([f1146d33](f1146d33))

#### Features

* add Default impl for Slab ([61bb3316](61bb3316))

## 0.0.4 (2019-21-30)

#### Features

* prevent items from being removed while concurrently accessed ([872c81d1](872c81d1))

* added `Slab::remove` method that marks an item to be removed when the last thread

accessing it finishes ([872c81d1](872c81d1))

#### Bug Fixes

* nicer handling of races in remove ([475d9a06](475d9a06))

#### Breaking Changes

* renamed `Slab::remove` to `Slab::take` ([872c81d1](872c81d1))

* `Slab::get` now returns a `Guard` type ([872c81d1](872c81d1))

## 0.0.3 (2019-07-30)

#### Bug Fixes

* split local/remote to fix false sharing & potential races ([69f95fb0](69f95fb0))

* set next pointer _before_ head ([cc7a0bf1](cc7a0bf1))

#### Breaking Changes

* removed potentially racy `Slab::len` and `Slab::capacity` methods ([27af7d6c](27af7d6c))

## 0.0.2 (2019-03-30)

#### Bug Fixes

* fix compilation failure in release mode ([617031da](617031da))

## 0.0.1 (2019-02-30)

- Initial release

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/Cargo.toml�����������������������������������������������������������������������0000644�����������������00000002671�00000000001�0011373�0����������������������������������������������������������������������������������������������������ustar ��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������# THIS FILE IS AUTOMATICALLY GENERATED BY CARGO

#

# When uploading crates to the registry Cargo will automatically

# "normalize" Cargo.toml files for maximal compatibility

# with all versions of Cargo and also rewrite `path` dependencies

# to registry (e.g., crates.io) dependencies.

#

# If you are reading this file be aware that the original Cargo.toml

# will likely look very different (and much more reasonable).

# See Cargo.toml.orig for the original contents.

[package]

edition = "2018"

name = "sharded-slab"

version = "0.1.4"

authors = ["Eliza Weisman "]

description = "A lock-free concurrent slab.\n"

homepage = "https://github.com/hawkw/sharded-slab"

documentation = "https://docs.rs/sharded-slab/0.1.4/sharded_slab"

readme = "README.md"

keywords = ["slab", "allocator", "lock-free", "atomic"]

categories = ["memory-management", "data-structures", "concurrency"]

license = "MIT"

repository = "https://github.com/hawkw/sharded-slab"

[package.metadata.docs.rs]

all-features = true

rustdoc-args = ["--cfg", "docsrs"]

[[bench]]

name = "bench"

harness = false

[dependencies.lazy_static]

version = "1"

[dev-dependencies.criterion]

version = "0.3"

[dev-dependencies.loom]

version = "0.5"

features = ["checkpoint"]

[dev-dependencies.proptest]

version = "1"

[dev-dependencies.slab]

version = "0.4.2"

[target."cfg(loom)".dependencies.loom]

version = "0.5"

features = ["checkpoint"]

optional = true

[badges.maintenance]

status = "experimental"

�����������������������������������������������������������������������sharded-slab-0.1.4/Cargo.toml.orig������������������������������������������������������������������0000644�0000000�0000000�00000001647�00726746425�0015106�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������[package]

name = "sharded-slab"

version = "0.1.4"

authors = ["Eliza Weisman "]

edition = "2018"

documentation = "https://docs.rs/sharded-slab/0.1.4/sharded_slab"

homepage = "https://github.com/hawkw/sharded-slab"

repository = "https://github.com/hawkw/sharded-slab"

readme = "README.md"

license = "MIT"

keywords = ["slab", "allocator", "lock-free", "atomic"]

categories = ["memory-management", "data-structures", "concurrency"]

description = """

A lock-free concurrent slab.

"""

[badges]

maintenance = { status = "experimental" }

[[bench]]

name = "bench"

harness = false

[dependencies]

lazy_static = "1"

[dev-dependencies]

loom = { version = "0.5", features = ["checkpoint"] }

proptest = "1"

criterion = "0.3"

slab = "0.4.2"

[target.'cfg(loom)'.dependencies]

loom = { version = "0.5", features = ["checkpoint"], optional = true }

[package.metadata.docs.rs]

all-features = true

rustdoc-args = ["--cfg", "docsrs"]�����������������������������������������������������������������������������������������sharded-slab-0.1.4/IMPLEMENTATION.md����������������������������������������������������������������0000644�0000000�0000000�00000016672�00726746425�0014672�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������Notes on `sharded-slab`'s implementation and design.

# Design

The sharded slab's design is strongly inspired by the ideas presented by

Leijen, Zorn, and de Moura in [Mimalloc: Free List Sharding in

Action][mimalloc]. In this report, the authors present a novel design for a

memory allocator based on a concept of _free list sharding_.

Memory allocators must keep track of what memory regions are not currently

allocated ("free") in order to provide them to future allocation requests.

The term [_free list_][freelist] refers to a technique for performing this

bookkeeping, where each free block stores a pointer to the next free block,

forming a linked list. The memory allocator keeps a pointer to the most

recently freed block, the _head_ of the free list. To allocate more memory,

the allocator pops from the free list by setting the head pointer to the

next free block of the current head block, and returning the previous head.

To deallocate a block, the block is pushed to the free list by setting its

first word to the current head pointer, and the head pointer is set to point

to the deallocated block. Most implementations of slab allocators backed by

arrays or vectors use a similar technique, where pointers are replaced by

indices into the backing array.

When allocations and deallocations can occur concurrently across threads,

they must synchronize accesses to the free list; either by putting the

entire allocator state inside of a lock, or by using atomic operations to

treat the free list as a lock-free structure (such as a [Treiber stack]). In

both cases, there is a significant performance cost — even when the free

list is lock-free, it is likely that a noticeable amount of time will be

spent in compare-and-swap loops. Ideally, the global synchronzation point

created by the single global free list could be avoided as much as possible.

The approach presented by Leijen, Zorn, and de Moura is to introduce

sharding and thus increase the granularity of synchronization significantly.

In mimalloc, the heap is _sharded_ so that each thread has its own

thread-local heap. Objects are always allocated from the local heap of the

thread where the allocation is performed. Because allocations are always

done from a thread's local heap, they need not be synchronized.

However, since objects can move between threads before being deallocated,

_deallocations_ may still occur concurrently. Therefore, Leijen et al.

introduce a concept of _local_ and _global_ free lists. When an object is

deallocated on the same thread it was originally allocated on, it is placed

on the local free list; if it is deallocated on another thread, it goes on

the global free list for the heap of the thread from which it originated. To

allocate, the local free list is used first; if it is empty, the entire

global free list is popped onto the local free list. Since the local free

list is only ever accessed by the thread it belongs to, it does not require

synchronization at all, and because the global free list is popped from

infrequently, the cost of synchronization has a reduced impact. A majority

of allocations can occur without any synchronization at all; and

deallocations only require synchronization when an object has left its

parent thread (a relatively uncommon case).

[mimalloc]: https://www.microsoft.com/en-us/research/uploads/prod/2019/06/mimalloc-tr-v1.pdf

[freelist]: https://en.wikipedia.org/wiki/Free_list

[Treiber stack]: https://en.wikipedia.org/wiki/Treiber_stack

# Implementation

A slab is represented as an array of [`MAX_THREADS`] _shards_. A shard

consists of a vector of one or more _pages_ plus associated metadata.

Finally, a page consists of an array of _slots_, head indices for the local

and remote free lists.

```text

┌─────────────┐

│ shard 1 │

│ │ ┌─────────────┐ ┌────────┐

│ pages───────┼───▶│ page 1 │ │ │

├─────────────┤ ├─────────────┤ ┌────▶│ next──┼─┐

│ shard 2 │ │ page 2 │ │ ├────────┤ │

├─────────────┤ │ │ │ │XXXXXXXX│ │

│ shard 3 │ │ local_head──┼──┘ ├────────┤ │

└─────────────┘ │ remote_head─┼──┐ │ │◀┘

... ├─────────────┤ │ │ next──┼─┐

┌─────────────┐ │ page 3 │ │ ├────────┤ │

│ shard n │ └─────────────┘ │ │XXXXXXXX│ │

└─────────────┘ ... │ ├────────┤ │

┌─────────────┐ │ │XXXXXXXX│ │

│ page n │ │ ├────────┤ │

└─────────────┘ │ │ │◀┘

└────▶│ next──┼───▶ ...

├────────┤

│XXXXXXXX│

└────────┘

```

The size of the first page in a shard is always a power of two, and every

subsequent page added after the first is twice as large as the page that

preceeds it.

```text

pg.

┌───┐ ┌─┬─┐

│ 0 │───▶ │ │

├───┤ ├─┼─┼─┬─┐

│ 1 │───▶ │ │ │ │

├───┤ ├─┼─┼─┼─┼─┬─┬─┬─┐

│ 2 │───▶ │ │ │ │ │ │ │ │

├───┤ ├─┼─┼─┼─┼─┼─┼─┼─┼─┬─┬─┬─┬─┬─┬─┬─┐

│ 3 │───▶ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │

└───┘ └─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┘

```

When searching for a free slot, the smallest page is searched first, and if

it is full, the search proceeds to the next page until either a free slot is

found or all available pages have been searched. If all available pages have

been searched and the maximum number of pages has not yet been reached, a

new page is then allocated.

Since every page is twice as large as the previous page, and all page sizes

are powers of two, we can determine the page index that contains a given

address by shifting the address down by the smallest page size and

looking at how many twos places necessary to represent that number,

telling us what power of two page size it fits inside of. We can

determine the number of twos places by counting the number of leading

zeros (unused twos places) in the number's binary representation, and

subtracting that count from the total number of bits in a word.

The formula for determining the page number that contains an offset is thus:

```rust,ignore

WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros()

```

where `WIDTH` is the number of bits in a `usize`, and `INDEX_SHIFT` is

```rust,ignore

INITIAL_PAGE_SIZE.trailing_zeros() + 1;

```

[`MAX_THREADS`]: https://docs.rs/sharded-slab/latest/sharded_slab/trait.Config.html#associatedconstant.MAX_THREADS

����������������������������������������������������������������������sharded-slab-0.1.4/LICENSE��������������������������������������������������������������������������0000644�0000000�0000000�00000002041�00726746425�0013211�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������Copyright (c) 2019 Eliza Weisman

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in

all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN

THE SOFTWARE.

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/README.md������������������������������������������������������������������������0000644�0000000�0000000�00000022052�00726746425�0013467�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������# sharded-slab

A lock-free concurrent slab.

[![Crates.io][crates-badge]][crates-url]

[![Documentation][docs-badge]][docs-url]

[![CI Status][ci-badge]][ci-url]

[![GitHub License][license-badge]][license]

![maintenance status][maint-badge]

[crates-badge]: https://img.shields.io/crates/v/sharded-slab.svg

[crates-url]: https://crates.io/crates/sharded-slab

[docs-badge]: https://docs.rs/sharded-slab/badge.svg

[docs-url]: https://docs.rs/sharded-slab/0.1.4/sharded_slab

[ci-badge]: https://github.com/hawkw/sharded-slab/workflows/CI/badge.svg

[ci-url]: https://github.com/hawkw/sharded-slab/actions?workflow=CI

[license-badge]: https://img.shields.io/crates/l/sharded-slab

[license]: LICENSE

[maint-badge]: https://img.shields.io/badge/maintenance-experimental-blue.svg

Slabs provide pre-allocated storage for many instances of a single data

type. When a large number of values of a single type are required,

this can be more efficient than allocating each item individually. Since the

allocated items are the same size, memory fragmentation is reduced, and

creating and removing new items can be very cheap.

This crate implements a lock-free concurrent slab, indexed by `usize`s.

**Note**: This crate is currently experimental. Please feel free to use it in

your projects, but bear in mind that there's still plenty of room for

optimization, and there may still be some lurking bugs.

## Usage

First, add this to your `Cargo.toml`:

```toml

sharded-slab = "0.1.1"

```

This crate provides two types, [`Slab`] and [`Pool`], which provide slightly

different APIs for using a sharded slab.

[`Slab`] implements a slab for _storing_ small types, sharing them between

threads, and accessing them by index. New entries are allocated by [inserting]

data, moving it in by value. Similarly, entries may be deallocated by [taking]

from the slab, moving the value out. This API is similar to a `Vec>`,

but allowing lock-free concurrent insertion and removal.

In contrast, the [`Pool`] type provides an [object pool] style API for

_reusing storage_. Rather than constructing values and moving them into

the pool, as with [`Slab`], [allocating an entry][create] from the pool

takes a closure that's provided with a mutable reference to initialize

the entry in place. When entries are deallocated, they are [cleared] in

place. Types which own a heap allocation can be cleared by dropping any

_data_ they store, but retaining any previously-allocated capacity. This

means that a [`Pool`] may be used to reuse a set of existing heap

allocations, reducing allocator load.

[`Slab`]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/struct.Slab.html

[inserting]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/struct.Slab.html#method.insert

[taking]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/struct.Slab.html#method.take

[`Pool`]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/struct.Pool.html

[create]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/struct.Pool.html#method.create

[cleared]: https://docs.rs/sharded-slab/0.1.4/sharded_slab/trait.Clear.html

[object pool]: https://en.wikipedia.org/wiki/Object_pool_pattern

### Examples

Inserting an item into the slab, returning an index:

```rust

use sharded_slab::Slab;

let slab = Slab::new();

let key = slab.insert("hello world").unwrap();

assert_eq!(slab.get(key).unwrap(), "hello world");

```

To share a slab across threads, it may be wrapped in an `Arc`:

```rust

use sharded_slab::Slab;

use std::sync::Arc;

let slab = Arc::new(Slab::new());

let slab2 = slab.clone();

let thread2 = std::thread::spawn(move || {

let key = slab2.insert("hello from thread two").unwrap();

assert_eq!(slab2.get(key).unwrap(), "hello from thread two");

key

});

let key1 = slab.insert("hello from thread one").unwrap();

assert_eq!(slab.get(key1).unwrap(), "hello from thread one");

// Wait for thread 2 to complete.

let key2 = thread2.join().unwrap();

// The item inserted by thread 2 remains in the slab.

assert_eq!(slab.get(key2).unwrap(), "hello from thread two");

```

If items in the slab must be mutated, a `Mutex` or `RwLock` may be used for

each item, providing granular locking of items rather than of the slab:

```rust

use sharded_slab::Slab;

use std::sync::{Arc, Mutex};

let slab = Arc::new(Slab::new());

let key = slab.insert(Mutex::new(String::from("hello world"))).unwrap();

let slab2 = slab.clone();

let thread2 = std::thread::spawn(move || {

let hello = slab2.get(key).expect("item missing");

let mut hello = hello.lock().expect("mutex poisoned");

*hello = String::from("hello everyone!");

});

thread2.join().unwrap();

let hello = slab.get(key).expect("item missing");

let mut hello = hello.lock().expect("mutex poisoned");

assert_eq!(hello.as_str(), "hello everyone!");

```

## Comparison with Similar Crates

- [`slab`]: Carl Lerche's `slab` crate provides a slab implementation with a

similar API, implemented by storing all data in a single vector.

Unlike `sharded-slab`, inserting and removing elements from the slab requires

mutable access. This means that if the slab is accessed concurrently by

multiple threads, it is necessary for it to be protected by a `Mutex` or

`RwLock`. Items may not be inserted or removed (or accessed, if a `Mutex` is

used) concurrently, even when they are unrelated. In many cases, the lock can

become a significant bottleneck. On the other hand, `sharded-slab` allows

separate indices in the slab to be accessed, inserted, and removed

concurrently without requiring a global lock. Therefore, when the slab is

shared across multiple threads, this crate offers significantly better

performance than `slab`.

However, the lock free slab introduces some additional constant-factor

overhead. This means that in use-cases where a slab is _not_ shared by

multiple threads and locking is not required, `sharded-slab` will likely

offer slightly worse performance.

In summary: `sharded-slab` offers significantly improved performance in

concurrent use-cases, while `slab` should be preferred in single-threaded

use-cases.

[`slab`]: https://crates.io/crates/slab

## Safety and Correctness

Most implementations of lock-free data structures in Rust require some

amount of unsafe code, and this crate is not an exception. In order to catch

potential bugs in this unsafe code, we make use of [`loom`], a

permutation-testing tool for concurrent Rust programs. All `unsafe` blocks

this crate occur in accesses to `loom` `UnsafeCell`s. This means that when

those accesses occur in this crate's tests, `loom` will assert that they are

valid under the C11 memory model across multiple permutations of concurrent

executions of those tests.

In order to guard against the [ABA problem][aba], this crate makes use of

_generational indices_. Each slot in the slab tracks a generation counter

which is incremented every time a value is inserted into that slot, and the

indices returned by `Slab::insert` include the generation of the slot when

the value was inserted, packed into the high-order bits of the index. This

ensures that if a value is inserted, removed, and a new value is inserted

into the same slot in the slab, the key returned by the first call to

`insert` will not map to the new value.

Since a fixed number of bits are set aside to use for storing the generation

counter, the counter will wrap around after being incremented a number of

times. To avoid situations where a returned index lives long enough to see the

generation counter wrap around to the same value, it is good to be fairly

generous when configuring the allocation of index bits.

[`loom`]: https://crates.io/crates/loom

[aba]: https://en.wikipedia.org/wiki/ABA_problem

## Performance

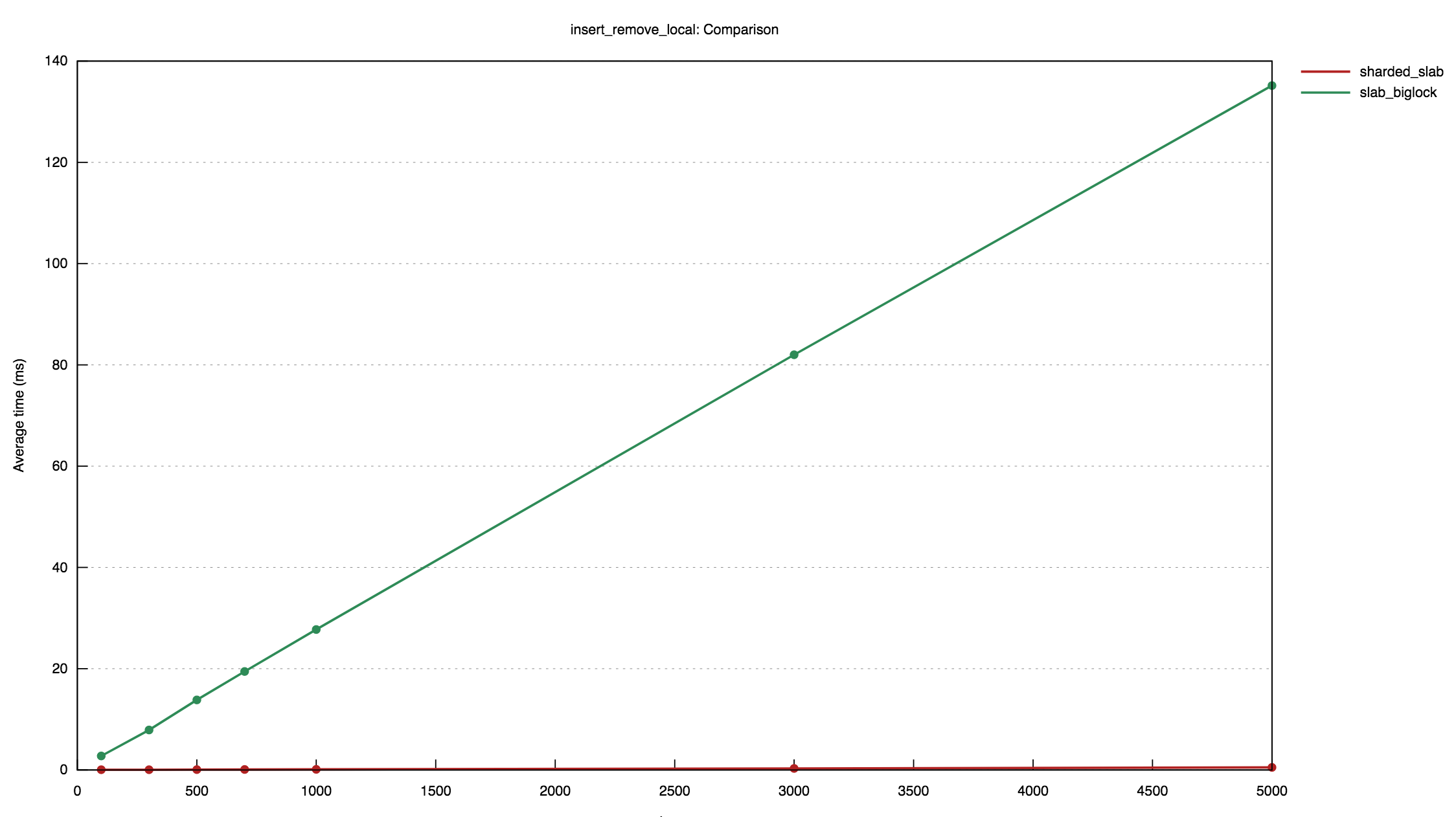

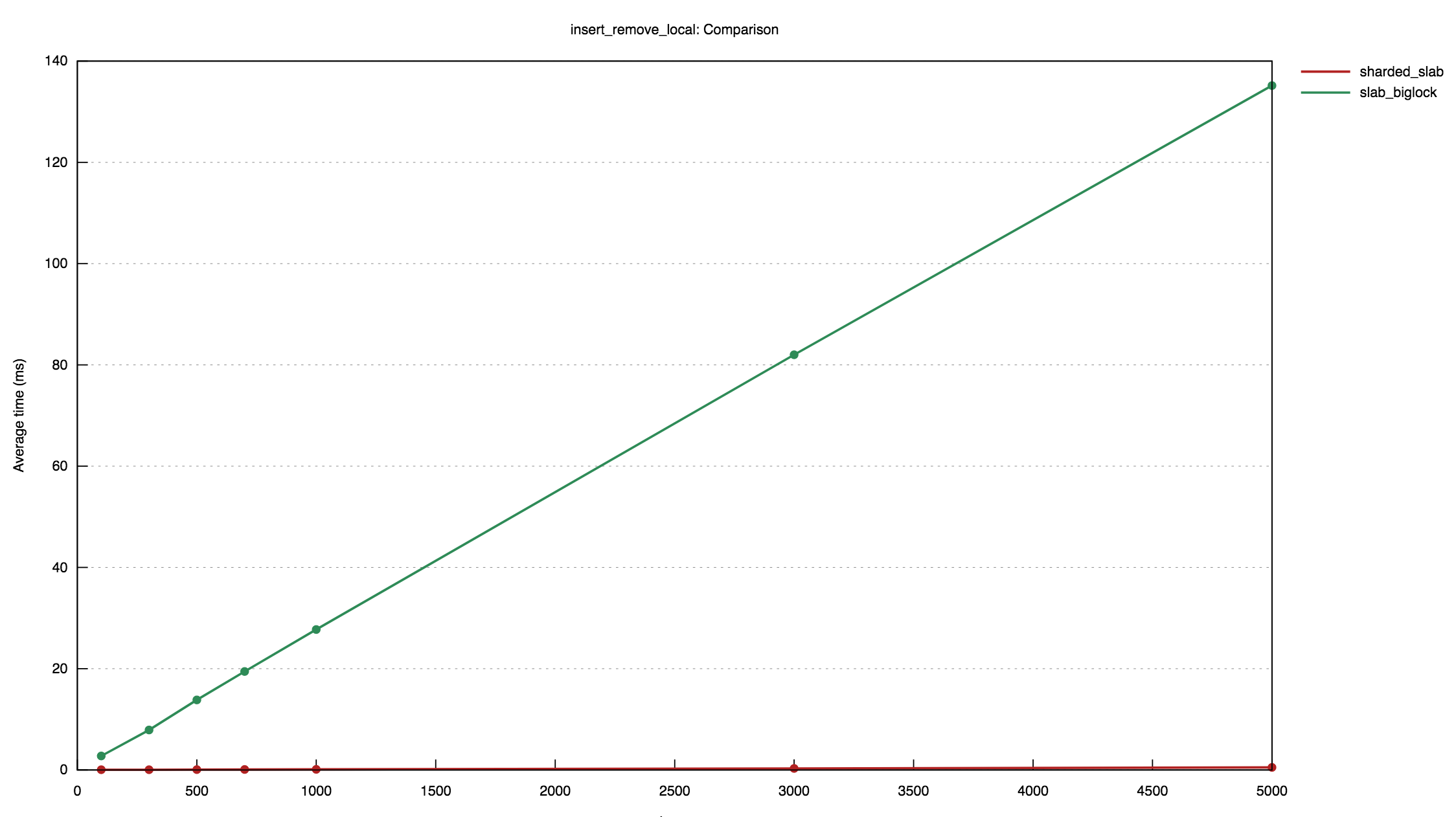

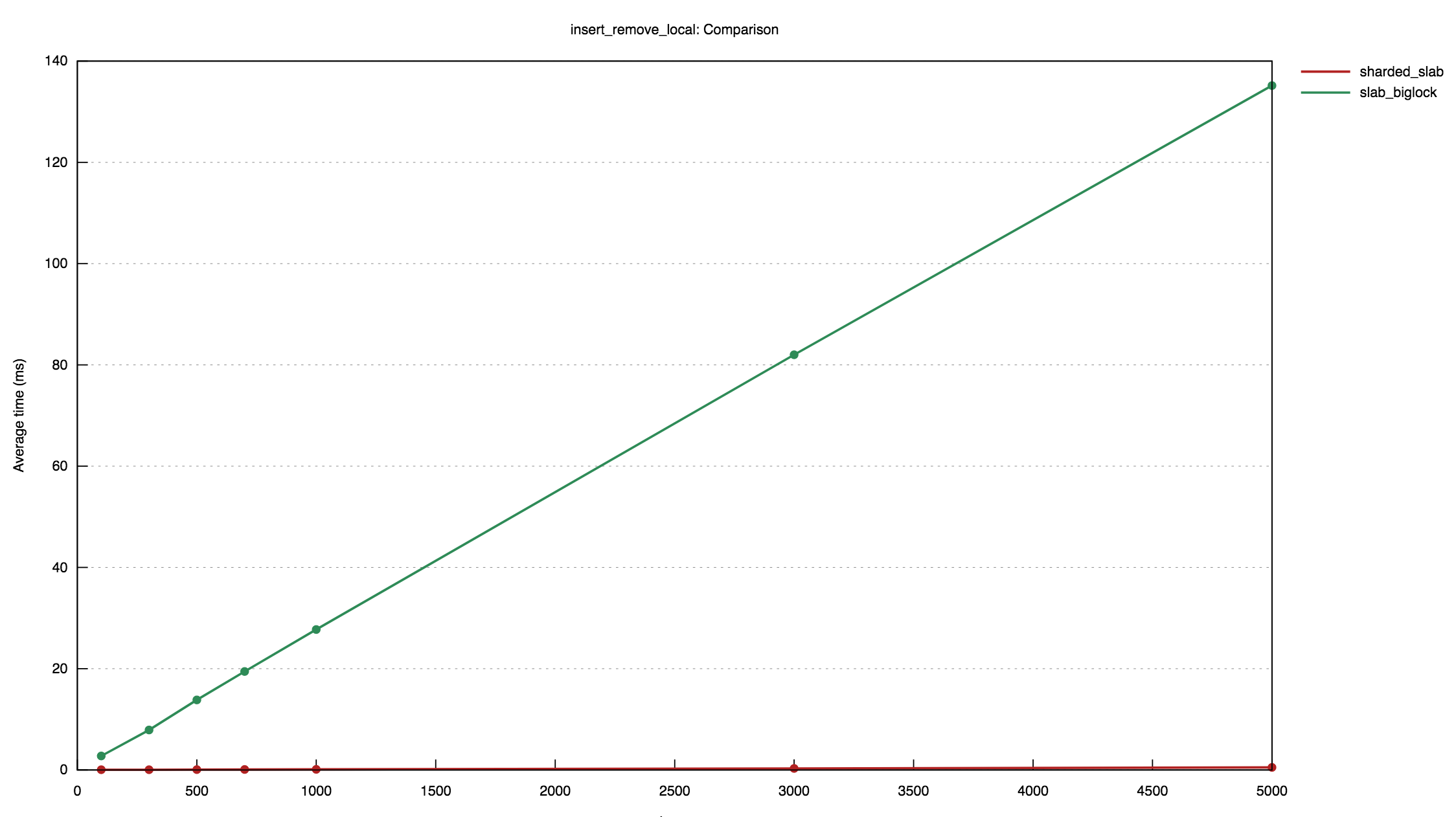

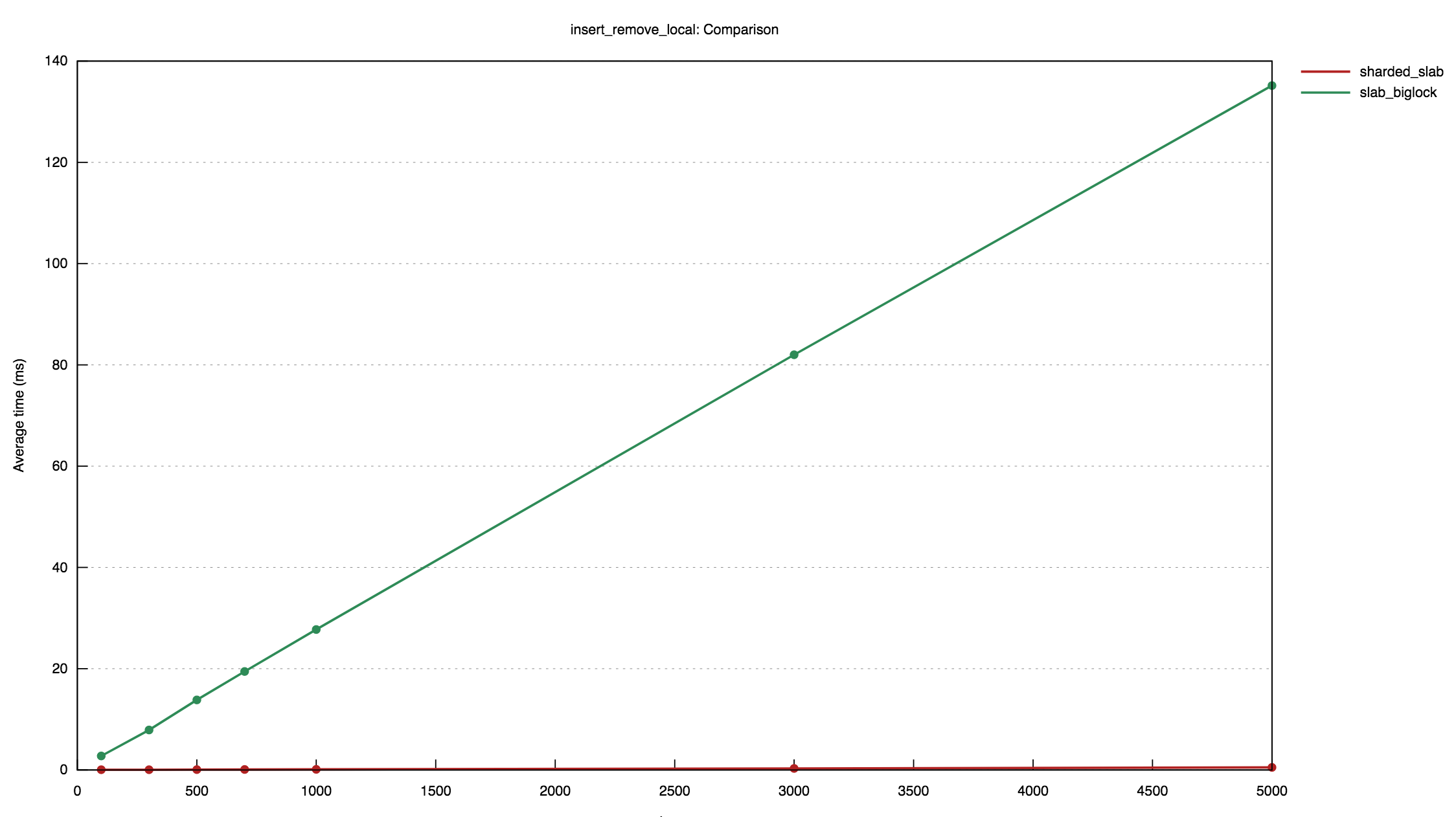

These graphs were produced by [benchmarks] of the sharded slab implementation,

using the [`criterion`] crate.

The first shows the results of a benchmark where an increasing number of

items are inserted and then removed into a slab concurrently by five

threads. It compares the performance of the sharded slab implementation

with a `RwLock`:

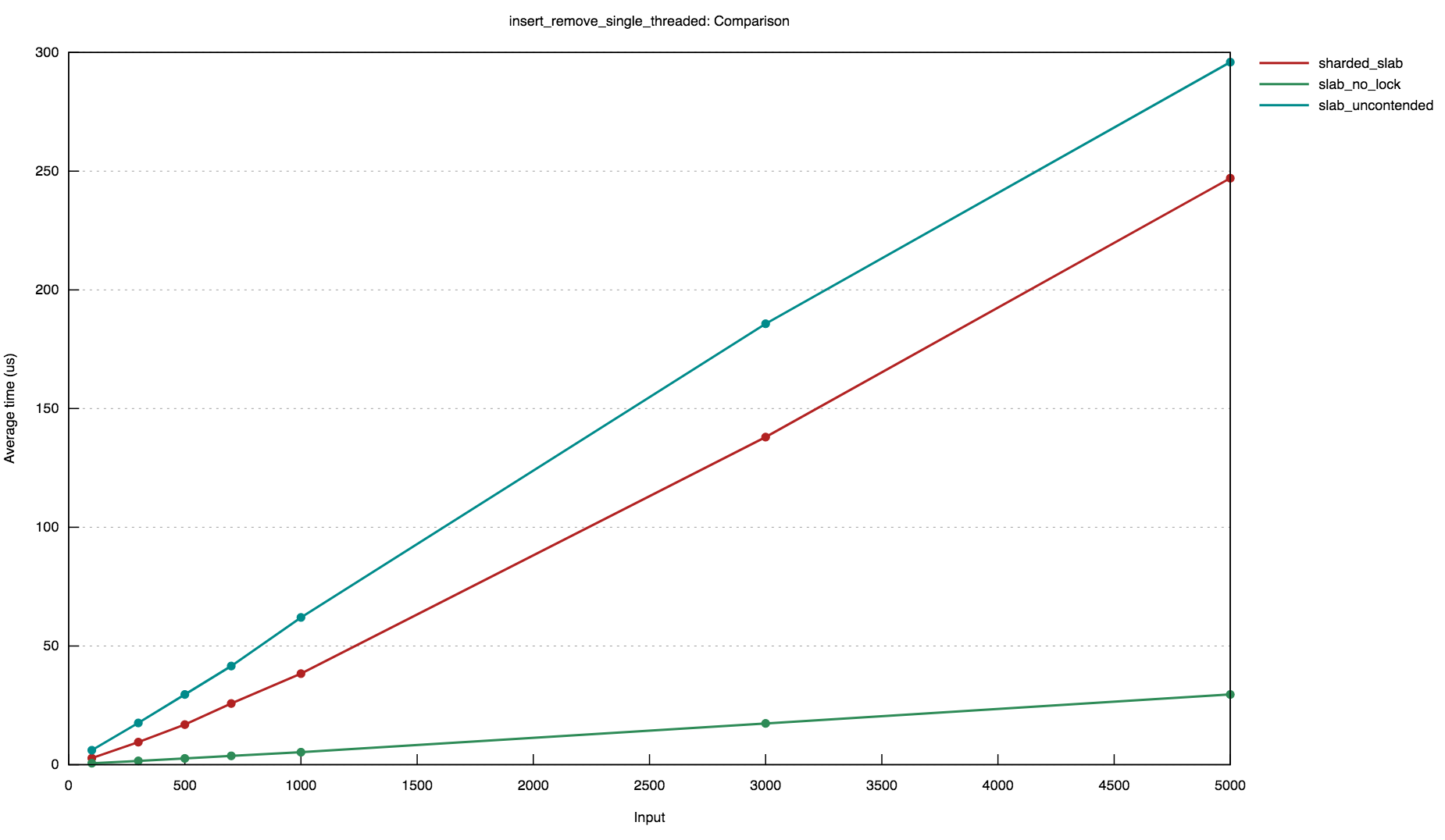

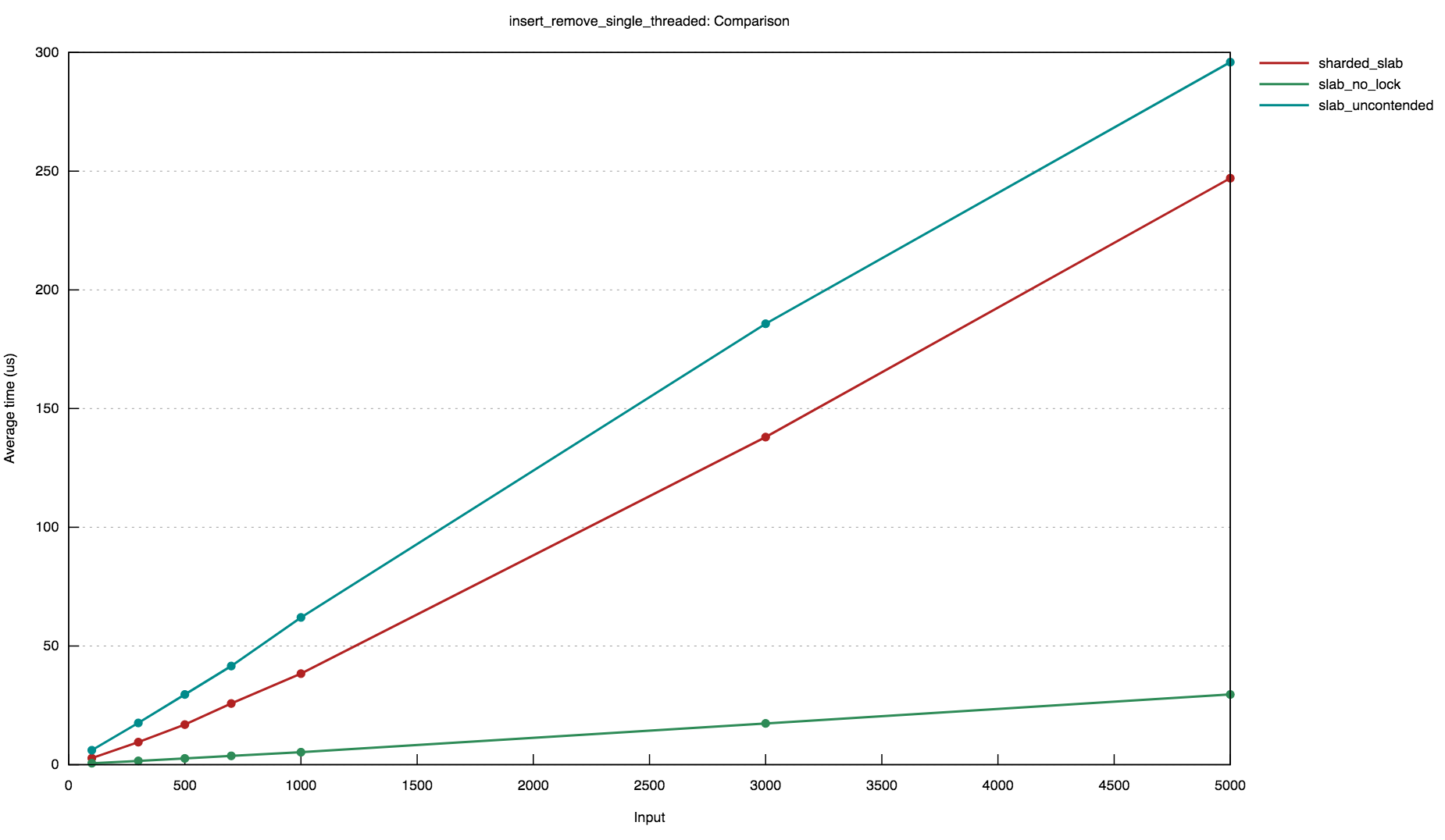

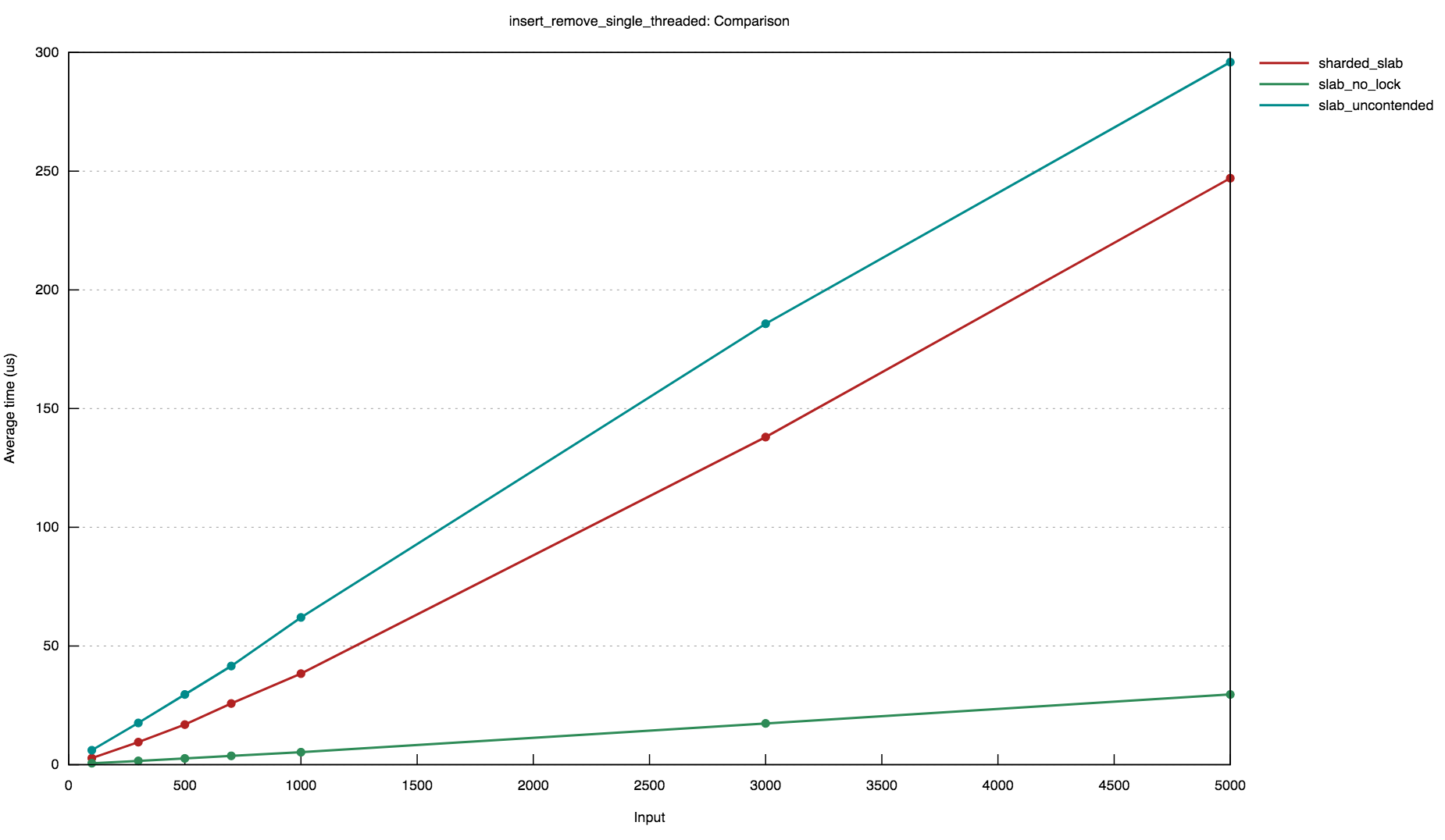

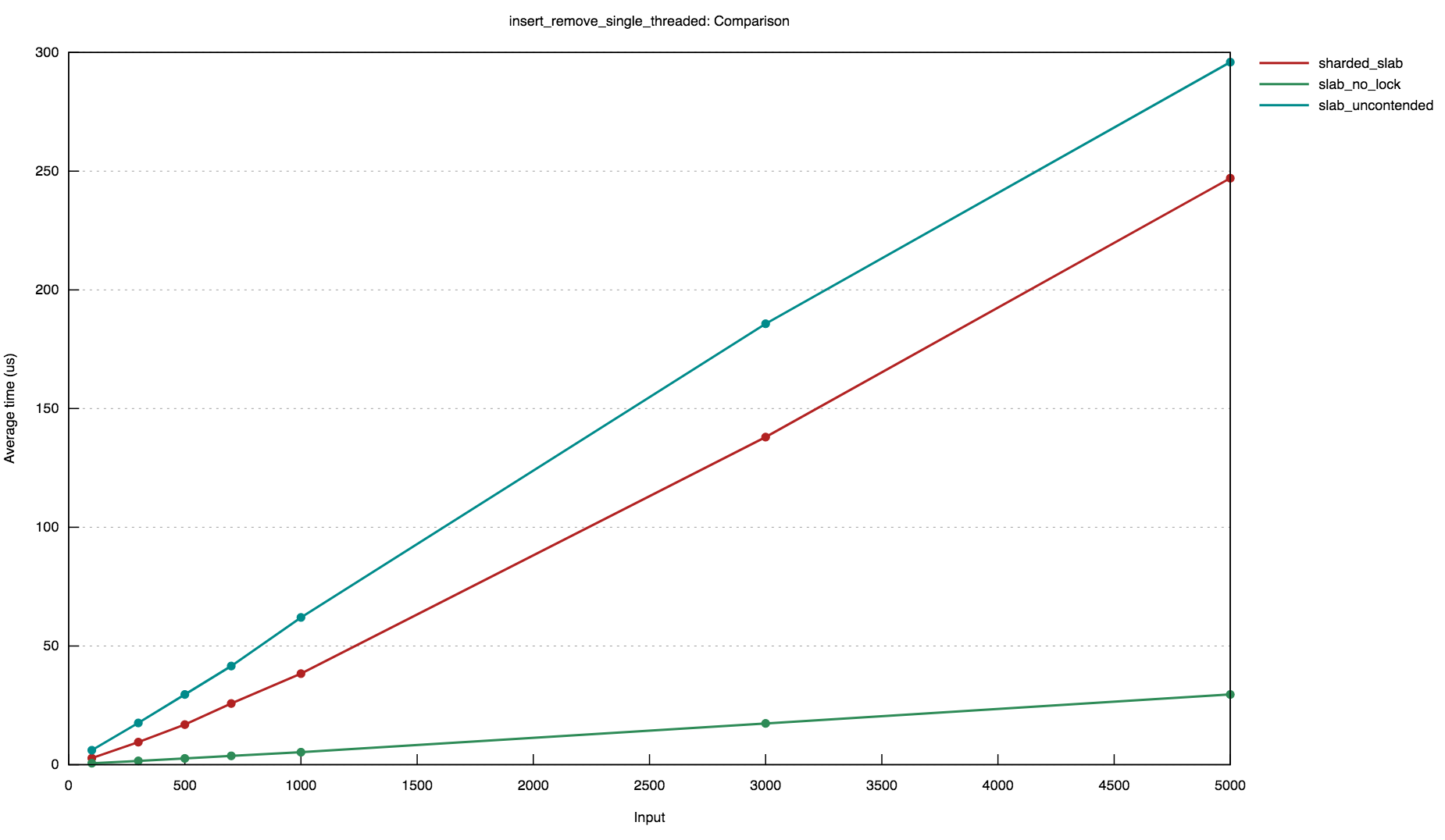

The second graph shows the results of a benchmark where an increasing

number of items are inserted and then removed by a _single_ thread. It

compares the performance of the sharded slab implementation with an

`RwLock` and a `mut slab::Slab`.

The second graph shows the results of a benchmark where an increasing

number of items are inserted and then removed by a _single_ thread. It

compares the performance of the sharded slab implementation with an

`RwLock` and a `mut slab::Slab`.

These benchmarks demonstrate that, while the sharded approach introduces

a small constant-factor overhead, it offers significantly better

performance across concurrent accesses.

[benchmarks]: https://github.com/hawkw/sharded-slab/blob/master/benches/bench.rs

[`criterion`]: https://crates.io/crates/criterion

## License

This project is licensed under the [MIT license](LICENSE).

### Contribution

Unless you explicitly state otherwise, any contribution intentionally submitted

for inclusion in this project by you, shall be licensed as MIT, without any

additional terms or conditions.

��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/benches/bench.rs�����������������������������������������������������������������0000644�0000000�0000000�00000015456�00726746425�0015256�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������use criterion::{criterion_group, criterion_main, BenchmarkId, Criterion};

use std::{

sync::{Arc, Barrier, RwLock},

thread,

time::{Duration, Instant},

};

#[derive(Clone)]

struct MultithreadedBench {

start: Arc,

end: Arc,

slab: Arc,

}

impl MultithreadedBench {

fn new(slab: Arc) -> Self {

Self {

start: Arc::new(Barrier::new(5)),

end: Arc::new(Barrier::new(5)),

slab,

}

}

fn thread(&self, f: impl FnOnce(&Barrier, &T) + Send + 'static) -> &Self {

let start = self.start.clone();

let end = self.end.clone();

let slab = self.slab.clone();

thread::spawn(move || {

f(&*start, &*slab);

end.wait();

});

self

}

fn run(&self) -> Duration {

self.start.wait();

let t0 = Instant::now();

self.end.wait();

t0.elapsed()

}

}

const N_INSERTIONS: &[usize] = &[100, 300, 500, 700, 1000, 3000, 5000];

fn insert_remove_local(c: &mut Criterion) {

// the 10000-insertion benchmark takes the `slab` crate about an hour to

// run; don't run this unless you're prepared for that...

// const N_INSERTIONS: &'static [usize] = &[100, 500, 1000, 5000, 10000];

let mut group = c.benchmark_group("insert_remove_local");

let g = group.measurement_time(Duration::from_secs(15));

for i in N_INSERTIONS {

g.bench_with_input(BenchmarkId::new("sharded_slab", i), i, |b, &i| {

b.iter_custom(|iters| {

let mut total = Duration::from_secs(0);

for _ in 0..iters {

let bench = MultithreadedBench::new(Arc::new(sharded_slab::Slab::new()));

let elapsed = bench

.thread(move |start, slab| {

start.wait();

let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

for i in v {

slab.remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

for i in v {

slab.remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

for i in v {

slab.remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

for i in v {

slab.remove(i);

}

})

.run();

total += elapsed;

}

total

})

});

g.bench_with_input(BenchmarkId::new("slab_biglock", i), i, |b, &i| {

b.iter_custom(|iters| {

let mut total = Duration::from_secs(0);

let i = i;

for _ in 0..iters {

let bench = MultithreadedBench::new(Arc::new(RwLock::new(slab::Slab::new())));

let elapsed = bench

.thread(move |start, slab| {

start.wait();

let v: Vec<_> =

(0..i).map(|i| slab.write().unwrap().insert(i)).collect();

for i in v {

slab.write().unwrap().remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> =

(0..i).map(|i| slab.write().unwrap().insert(i)).collect();

for i in v {

slab.write().unwrap().remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> =

(0..i).map(|i| slab.write().unwrap().insert(i)).collect();

for i in v {

slab.write().unwrap().remove(i);

}

})

.thread(move |start, slab| {

start.wait();

let v: Vec<_> =

(0..i).map(|i| slab.write().unwrap().insert(i)).collect();

for i in v {

slab.write().unwrap().remove(i);

}

})

.run();

total += elapsed;

}

total

})

});

}

group.finish();

}

fn insert_remove_single_thread(c: &mut Criterion) {

// the 10000-insertion benchmark takes the `slab` crate about an hour to

// run; don't run this unless you're prepared for that...

// const N_INSERTIONS: &'static [usize] = &[100, 500, 1000, 5000, 10000];

let mut group = c.benchmark_group("insert_remove_single_threaded");

for i in N_INSERTIONS {

group.bench_with_input(BenchmarkId::new("sharded_slab", i), i, |b, &i| {

let slab = sharded_slab::Slab::new();

b.iter(|| {

let v: Vec<_> = (0..i).map(|i| slab.insert(i).unwrap()).collect();

for i in v {

slab.remove(i);

}

});

});

group.bench_with_input(BenchmarkId::new("slab_no_lock", i), i, |b, &i| {

let mut slab = slab::Slab::new();

b.iter(|| {

let v: Vec<_> = (0..i).map(|i| slab.insert(i)).collect();

for i in v {

slab.remove(i);

}

});

});

group.bench_with_input(BenchmarkId::new("slab_uncontended", i), i, |b, &i| {

let slab = RwLock::new(slab::Slab::new());

b.iter(|| {

let v: Vec<_> = (0..i).map(|i| slab.write().unwrap().insert(i)).collect();

for i in v {

slab.write().unwrap().remove(i);

}

});

});

}

group.finish();

}

criterion_group!(benches, insert_remove_local, insert_remove_single_thread);

criterion_main!(benches);

������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/bin/loom.sh����������������������������������������������������������������������0000755�0000000�0000000�00000001011�00726746425�0014255�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������#!/usr/bin/env bash

# Runs Loom tests with defaults for Loom's configuration values.

#

# The tests are compiled in release mode to improve performance, but debug

# assertions are enabled.

#

# Any arguments to this script are passed to the `cargo test` invocation.

RUSTFLAGS="${RUSTFLAGS} --cfg loom -C debug-assertions=on" \

LOOM_MAX_PREEMPTIONS="${LOOM_MAX_PREEMPTIONS:-2}" \

LOOM_CHECKPOINT_INTERVAL="${LOOM_CHECKPOINT_INTERVAL:-1}" \

LOOM_LOG=1 \

LOOM_LOCATION=1 \

cargo test --release --lib "$@"

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/src/cfg.rs�����������������������������������������������������������������������0000644�0000000�0000000�00000015341�00726746425�0014107�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������use crate::page::{

slot::{Generation, RefCount},

Addr,

};

use crate::Pack;

use std::{fmt, marker::PhantomData};

/// Configuration parameters which can be overridden to tune the behavior of a slab.

pub trait Config: Sized {

/// The maximum number of threads which can access the slab.

///

/// This value (rounded to a power of two) determines the number of shards

/// in the slab. If a thread is created, accesses the slab, and then terminates,

/// its shard may be reused and thus does not count against the maximum

/// number of threads once the thread has terminated.

const MAX_THREADS: usize = DefaultConfig::MAX_THREADS;

/// The maximum number of pages in each shard in the slab.

///

/// This value, in combination with `INITIAL_PAGE_SIZE`, determines how many

/// bits of each index are used to represent page addresses.

const MAX_PAGES: usize = DefaultConfig::MAX_PAGES;

/// The size of the first page in each shard.

///

/// When a page in a shard has been filled with values, a new page

/// will be allocated that is twice as large as the previous page. Thus, the

/// second page will be twice this size, and the third will be four times

/// this size, and so on.

///

/// Note that page sizes must be powers of two. If this value is not a power

/// of two, it will be rounded to the next power of two.

const INITIAL_PAGE_SIZE: usize = DefaultConfig::INITIAL_PAGE_SIZE;

/// Sets a number of high-order bits in each index which are reserved from

/// user code.

///

/// Note that these bits are taken from the generation counter; if the page

/// address and thread IDs are configured to use a large number of bits,

/// reserving additional bits will decrease the period of the generation

/// counter. These should thus be used relatively sparingly, to ensure that

/// generation counters are able to effectively prevent the ABA problem.

const RESERVED_BITS: usize = 0;

}

pub(crate) trait CfgPrivate: Config {

const USED_BITS: usize = Generation::::LEN + Generation::::SHIFT;

const INITIAL_SZ: usize = next_pow2(Self::INITIAL_PAGE_SIZE);

const MAX_SHARDS: usize = next_pow2(Self::MAX_THREADS - 1);

const ADDR_INDEX_SHIFT: usize = Self::INITIAL_SZ.trailing_zeros() as usize + 1;

fn page_size(n: usize) -> usize {

Self::INITIAL_SZ * 2usize.pow(n as _)

}

fn debug() -> DebugConfig {

DebugConfig { _cfg: PhantomData }

}

fn validate() {

assert!(

Self::INITIAL_SZ.is_power_of_two(),

"invalid Config: {:#?}",

Self::debug(),

);

assert!(

Self::INITIAL_SZ <= Addr::::BITS,

"invalid Config: {:#?}",

Self::debug()

);

assert!(

Generation::::BITS >= 3,

"invalid Config: {:#?}\ngeneration counter should be at least 3 bits!",

Self::debug()

);

assert!(

Self::USED_BITS <= WIDTH,

"invalid Config: {:#?}\ntotal number of bits per index is too large to fit in a word!",

Self::debug()

);

assert!(

WIDTH - Self::USED_BITS >= Self::RESERVED_BITS,

"invalid Config: {:#?}\nindices are too large to fit reserved bits!",

Self::debug()

);

assert!(

RefCount::::MAX > 1,

"invalid config: {:#?}\n maximum concurrent references would be {}",

Self::debug(),

RefCount::::MAX,

);

}

#[inline(always)]

fn unpack>(packed: usize) -> A {

A::from_packed(packed)

}

#[inline(always)]

fn unpack_addr(packed: usize) -> Addr {

Self::unpack(packed)

}

#[inline(always)]

fn unpack_tid(packed: usize) -> crate::Tid {

Self::unpack(packed)

}

#[inline(always)]

fn unpack_gen(packed: usize) -> Generation {

Self::unpack(packed)

}

}

impl CfgPrivate for C {}

/// Default slab configuration values.

#[derive(Copy, Clone)]

pub struct DefaultConfig {

_p: (),

}

pub(crate) struct DebugConfig {

_cfg: PhantomData,

}

pub(crate) const WIDTH: usize = std::mem::size_of::() * 8;

pub(crate) const fn next_pow2(n: usize) -> usize {

let pow2 = n.count_ones() == 1;

let zeros = n.leading_zeros();

1 << (WIDTH - zeros as usize - pow2 as usize)

}

// === impl DefaultConfig ===

impl Config for DefaultConfig {

const INITIAL_PAGE_SIZE: usize = 32;

#[cfg(target_pointer_width = "64")]

const MAX_THREADS: usize = 4096;

#[cfg(target_pointer_width = "32")]

// TODO(eliza): can we find enough bits to give 32-bit platforms more threads?

const MAX_THREADS: usize = 128;

const MAX_PAGES: usize = WIDTH / 2;

}

impl fmt::Debug for DefaultConfig {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

Self::debug().fmt(f)

}

}

impl fmt::Debug for DebugConfig {

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

f.debug_struct(std::any::type_name::())

.field("initial_page_size", &C::INITIAL_SZ)

.field("max_shards", &C::MAX_SHARDS)

.field("max_pages", &C::MAX_PAGES)

.field("used_bits", &C::USED_BITS)

.field("reserved_bits", &C::RESERVED_BITS)

.field("pointer_width", &WIDTH)

.field("max_concurrent_references", &RefCount::::MAX)

.finish()

}

}

#[cfg(test)]

mod tests {

use super::*;

use crate::test_util;

use crate::Slab;

#[test]

#[cfg_attr(loom, ignore)]

#[should_panic]

fn validates_max_refs() {

struct GiantGenConfig;

// Configure the slab with a very large number of bits for the generation

// counter. This will only leave 1 bit to use for the slot reference

// counter, which will fail to validate.

impl Config for GiantGenConfig {

const INITIAL_PAGE_SIZE: usize = 1;

const MAX_THREADS: usize = 1;

const MAX_PAGES: usize = 1;

}

let _slab = Slab::::new_with_config::();

}

#[test]

#[cfg_attr(loom, ignore)]

fn big() {

let slab = Slab::new();

for i in 0..10000 {

println!("{:?}", i);

let k = slab.insert(i).expect("insert");

assert_eq!(slab.get(k).expect("get"), i);

}

}

#[test]

#[cfg_attr(loom, ignore)]

fn custom_page_sz() {

let slab = Slab::new_with_config::();

for i in 0..4096 {

println!("{}", i);

let k = slab.insert(i).expect("insert");

assert_eq!(slab.get(k).expect("get"), i);

}

}

}

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/src/clear.rs���������������������������������������������������������������������0000644�0000000�0000000�00000005231�00726746425�0014433�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������use std::{collections, hash, ops::DerefMut, sync};

/// Trait implemented by types which can be cleared in place, retaining any

/// allocated memory.

///

/// This is essentially a generalization of methods on standard library

/// collection types, including as [`Vec::clear`], [`String::clear`], and

/// [`HashMap::clear`]. These methods drop all data stored in the collection,

/// but retain the collection's heap allocation for future use. Types such as

/// `BTreeMap`, whose `clear` methods drops allocations, should not

/// implement this trait.

///

/// When implemented for types which do not own a heap allocation, `Clear`

/// should reset the type in place if possible. If the type has an empty state

/// or stores `Option`s, those values should be reset to the empty state. For

/// "plain old data" types, which hold no pointers to other data and do not have

/// an empty or initial state, it's okay for a `Clear` implementation to be a

/// no-op. In that case, it essentially serves as a marker indicating that the

/// type may be reused to store new data.

///

/// [`Vec::clear`]: https://doc.rust-lang.org/stable/std/vec/struct.Vec.html#method.clear

/// [`String::clear`]: https://doc.rust-lang.org/stable/std/string/struct.String.html#method.clear

/// [`HashMap::clear`]: https://doc.rust-lang.org/stable/std/collections/struct.HashMap.html#method.clear

pub trait Clear {

/// Clear all data in `self`, retaining the allocated capacithy.

fn clear(&mut self);

}

impl Clear for Option {

fn clear(&mut self) {

let _ = self.take();

}

}

impl Clear for Box

where

T: Clear,

{

#[inline]

fn clear(&mut self) {

self.deref_mut().clear()

}

}

impl Clear for Vec {

#[inline]

fn clear(&mut self) {

Vec::clear(self)

}

}

impl Clear for collections::HashMap

where

K: hash::Hash + Eq,

S: hash::BuildHasher,

{

#[inline]

fn clear(&mut self) {

collections::HashMap::clear(self)

}

}

impl Clear for collections::HashSet

where

T: hash::Hash + Eq,

S: hash::BuildHasher,

{

#[inline]

fn clear(&mut self) {

collections::HashSet::clear(self)

}

}

impl Clear for String {

#[inline]

fn clear(&mut self) {

String::clear(self)

}

}

impl Clear for sync::Mutex {

#[inline]

fn clear(&mut self) {

self.get_mut().unwrap().clear();

}

}

impl Clear for sync::RwLock {

#[inline]

fn clear(&mut self) {

self.write().unwrap().clear();

}

}

#[cfg(all(loom, test))]

impl Clear for crate::sync::alloc::Track {

fn clear(&mut self) {

self.get_mut().clear()

}

}

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/src/implementation.rs������������������������������������������������������������0000644�0000000�0000000�00000020041�00726746425�0016366�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������// This module exists only to provide a separate page for the implementation

// documentation.

//! Notes on `sharded-slab`'s implementation and design.

//!

//! # Design

//!

//! The sharded slab's design is strongly inspired by the ideas presented by

//! Leijen, Zorn, and de Moura in [Mimalloc: Free List Sharding in

//! Action][mimalloc]. In this report, the authors present a novel design for a

//! memory allocator based on a concept of _free list sharding_.

//!

//! Memory allocators must keep track of what memory regions are not currently

//! allocated ("free") in order to provide them to future allocation requests.

//! The term [_free list_][freelist] refers to a technique for performing this

//! bookkeeping, where each free block stores a pointer to the next free block,

//! forming a linked list. The memory allocator keeps a pointer to the most

//! recently freed block, the _head_ of the free list. To allocate more memory,

//! the allocator pops from the free list by setting the head pointer to the

//! next free block of the current head block, and returning the previous head.

//! To deallocate a block, the block is pushed to the free list by setting its

//! first word to the current head pointer, and the head pointer is set to point

//! to the deallocated block. Most implementations of slab allocators backed by

//! arrays or vectors use a similar technique, where pointers are replaced by

//! indices into the backing array.

//!

//! When allocations and deallocations can occur concurrently across threads,

//! they must synchronize accesses to the free list; either by putting the

//! entire allocator state inside of a lock, or by using atomic operations to

//! treat the free list as a lock-free structure (such as a [Treiber stack]). In

//! both cases, there is a significant performance cost — even when the free

//! list is lock-free, it is likely that a noticeable amount of time will be

//! spent in compare-and-swap loops. Ideally, the global synchronzation point

//! created by the single global free list could be avoided as much as possible.

//!

//! The approach presented by Leijen, Zorn, and de Moura is to introduce

//! sharding and thus increase the granularity of synchronization significantly.

//! In mimalloc, the heap is _sharded_ so that each thread has its own

//! thread-local heap. Objects are always allocated from the local heap of the

//! thread where the allocation is performed. Because allocations are always

//! done from a thread's local heap, they need not be synchronized.

//!

//! However, since objects can move between threads before being deallocated,

//! _deallocations_ may still occur concurrently. Therefore, Leijen et al.

//! introduce a concept of _local_ and _global_ free lists. When an object is

//! deallocated on the same thread it was originally allocated on, it is placed

//! on the local free list; if it is deallocated on another thread, it goes on

//! the global free list for the heap of the thread from which it originated. To

//! allocate, the local free list is used first; if it is empty, the entire

//! global free list is popped onto the local free list. Since the local free

//! list is only ever accessed by the thread it belongs to, it does not require

//! synchronization at all, and because the global free list is popped from

//! infrequently, the cost of synchronization has a reduced impact. A majority

//! of allocations can occur without any synchronization at all; and

//! deallocations only require synchronization when an object has left its

//! parent thread (a relatively uncommon case).

//!

//! [mimalloc]: https://www.microsoft.com/en-us/research/uploads/prod/2019/06/mimalloc-tr-v1.pdf

//! [freelist]: https://en.wikipedia.org/wiki/Free_list

//! [Treiber stack]: https://en.wikipedia.org/wiki/Treiber_stack

//!

//! # Implementation

//!

//! A slab is represented as an array of [`MAX_THREADS`] _shards_. A shard

//! consists of a vector of one or more _pages_ plus associated metadata.

//! Finally, a page consists of an array of _slots_, head indices for the local

//! and remote free lists.

//!

//! ```text

//! ┌─────────────┐

//! │ shard 1 │

//! │ │ ┌─────────────┐ ┌────────┐

//! │ pages───────┼───▶│ page 1 │ │ │

//! ├─────────────┤ ├─────────────┤ ┌────▶│ next──┼─┐

//! │ shard 2 │ │ page 2 │ │ ├────────┤ │

//! ├─────────────┤ │ │ │ │XXXXXXXX│ │

//! │ shard 3 │ │ local_head──┼──┘ ├────────┤ │

//! └─────────────┘ │ remote_head─┼──┐ │ │◀┘

//! ... ├─────────────┤ │ │ next──┼─┐

//! ┌─────────────┐ │ page 3 │ │ ├────────┤ │

//! │ shard n │ └─────────────┘ │ │XXXXXXXX│ │

//! └─────────────┘ ... │ ├────────┤ │

//! ┌─────────────┐ │ │XXXXXXXX│ │

//! │ page n │ │ ├────────┤ │

//! └─────────────┘ │ │ │◀┘

//! └────▶│ next──┼───▶ ...

//! ├────────┤

//! │XXXXXXXX│

//! └────────┘

//! ```

//!

//!

//! The size of the first page in a shard is always a power of two, and every

//! subsequent page added after the first is twice as large as the page that

//! preceeds it.

//!

//! ```text

//!

//! pg.

//! ┌───┐ ┌─┬─┐

//! │ 0 │───▶ │ │

//! ├───┤ ├─┼─┼─┬─┐

//! │ 1 │───▶ │ │ │ │

//! ├───┤ ├─┼─┼─┼─┼─┬─┬─┬─┐

//! │ 2 │───▶ │ │ │ │ │ │ │ │

//! ├───┤ ├─┼─┼─┼─┼─┼─┼─┼─┼─┬─┬─┬─┬─┬─┬─┬─┐

//! │ 3 │───▶ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │ │

//! └───┘ └─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┴─┘

//! ```

//!

//! When searching for a free slot, the smallest page is searched first, and if

//! it is full, the search proceeds to the next page until either a free slot is

//! found or all available pages have been searched. If all available pages have

//! been searched and the maximum number of pages has not yet been reached, a

//! new page is then allocated.

//!

//! Since every page is twice as large as the previous page, and all page sizes

//! are powers of two, we can determine the page index that contains a given

//! address by shifting the address down by the smallest page size and

//! looking at how many twos places necessary to represent that number,

//! telling us what power of two page size it fits inside of. We can

//! determine the number of twos places by counting the number of leading

//! zeros (unused twos places) in the number's binary representation, and

//! subtracting that count from the total number of bits in a word.

//!

//! The formula for determining the page number that contains an offset is thus:

//!

//! ```rust,ignore

//! WIDTH - ((offset + INITIAL_PAGE_SIZE) >> INDEX_SHIFT).leading_zeros()

//! ```

//!

//! where `WIDTH` is the number of bits in a `usize`, and `INDEX_SHIFT` is

//!

//! ```rust,ignore

//! INITIAL_PAGE_SIZE.trailing_zeros() + 1;

//! ```

//!

//! [`MAX_THREADS`]: https://docs.rs/sharded-slab/latest/sharded_slab/trait.Config.html#associatedconstant.MAX_THREADS

�����������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/src/iter.rs����������������������������������������������������������������������0000644�0000000�0000000�00000002417�00726746425�0014313�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������use crate::{page, shard};

use std::slice;

#[derive(Debug)]

pub struct UniqueIter<'a, T, C: crate::cfg::Config> {

pub(super) shards: shard::IterMut<'a, Option, C>,

pub(super) pages: slice::Iter<'a, page::Shared, C>>,

pub(super) slots: Option>,

}

impl<'a, T, C: crate::cfg::Config> Iterator for UniqueIter<'a, T, C> {

type Item = &'a T;

fn next(&mut self) -> Option {

test_println!("UniqueIter::next");

loop {

test_println!("-> try next slot");

if let Some(item) = self.slots.as_mut().and_then(|slots| slots.next()) {

test_println!("-> found an item!");

return Some(item);

}

test_println!("-> try next page");

if let Some(page) = self.pages.next() {

test_println!("-> found another page");

self.slots = page.iter();

continue;

}

test_println!("-> try next shard");

if let Some(shard) = self.shards.next() {

test_println!("-> found another shard");

self.pages = shard.iter();

} else {

test_println!("-> all done!");

return None;

}

}

}

}

�������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/src/lib.rs�����������������������������������������������������������������������0000644�0000000�0000000�00000106601�00726746425�0014116�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������//! A lock-free concurrent slab.

//!

//! Slabs provide pre-allocated storage for many instances of a single data

//! type. When a large number of values of a single type are required,

//! this can be more efficient than allocating each item individually. Since the

//! allocated items are the same size, memory fragmentation is reduced, and

//! creating and removing new items can be very cheap.

//!

//! This crate implements a lock-free concurrent slab, indexed by `usize`s.

//!

//! ## Usage

//!

//! First, add this to your `Cargo.toml`:

//!

//! ```toml

//! sharded-slab = "0.1.1"

//! ```

//!

//! This crate provides two types, [`Slab`] and [`Pool`], which provide

//! slightly different APIs for using a sharded slab.

//!

//! [`Slab`] implements a slab for _storing_ small types, sharing them between

//! threads, and accessing them by index. New entries are allocated by

//! [inserting] data, moving it in by value. Similarly, entries may be

//! deallocated by [taking] from the slab, moving the value out. This API is

//! similar to a `Vec>`, but allowing lock-free concurrent insertion

//! and removal.

//!

//! In contrast, the [`Pool`] type provides an [object pool] style API for

//! _reusing storage_. Rather than constructing values and moving them into the

//! pool, as with [`Slab`], [allocating an entry][create] from the pool takes a

//! closure that's provided with a mutable reference to initialize the entry in

//! place. When entries are deallocated, they are [cleared] in place. Types

//! which own a heap allocation can be cleared by dropping any _data_ they

//! store, but retaining any previously-allocated capacity. This means that a

//! [`Pool`] may be used to reuse a set of existing heap allocations, reducing

//! allocator load.

//!

//! [inserting]: Slab::insert

//! [taking]: Slab::take

//! [create]: Pool::create

//! [cleared]: Clear

//! [object pool]: https://en.wikipedia.org/wiki/Object_pool_pattern

//!

//! # Examples

//!

//! Inserting an item into the slab, returning an index:

//! ```rust

//! # use sharded_slab::Slab;

//! let slab = Slab::new();

//!

//! let key = slab.insert("hello world").unwrap();

//! assert_eq!(slab.get(key).unwrap(), "hello world");

//! ```

//!

//! To share a slab across threads, it may be wrapped in an `Arc`:

//! ```rust

//! # use sharded_slab::Slab;

//! use std::sync::Arc;

//! let slab = Arc::new(Slab::new());

//!

//! let slab2 = slab.clone();

//! let thread2 = std::thread::spawn(move || {

//! let key = slab2.insert("hello from thread two").unwrap();

//! assert_eq!(slab2.get(key).unwrap(), "hello from thread two");

//! key

//! });

//!

//! let key1 = slab.insert("hello from thread one").unwrap();

//! assert_eq!(slab.get(key1).unwrap(), "hello from thread one");

//!

//! // Wait for thread 2 to complete.

//! let key2 = thread2.join().unwrap();

//!

//! // The item inserted by thread 2 remains in the slab.

//! assert_eq!(slab.get(key2).unwrap(), "hello from thread two");

//!```

//!

//! If items in the slab must be mutated, a `Mutex` or `RwLock` may be used for

//! each item, providing granular locking of items rather than of the slab:

//!

//! ```rust

//! # use sharded_slab::Slab;

//! use std::sync::{Arc, Mutex};

//! let slab = Arc::new(Slab::new());

//!

//! let key = slab.insert(Mutex::new(String::from("hello world"))).unwrap();

//!

//! let slab2 = slab.clone();

//! let thread2 = std::thread::spawn(move || {

//! let hello = slab2.get(key).expect("item missing");

//! let mut hello = hello.lock().expect("mutex poisoned");

//! *hello = String::from("hello everyone!");

//! });

//!

//! thread2.join().unwrap();

//!

//! let hello = slab.get(key).expect("item missing");

//! let mut hello = hello.lock().expect("mutex poisoned");

//! assert_eq!(hello.as_str(), "hello everyone!");

//! ```

//!

//! # Configuration

//!

//! For performance reasons, several values used by the slab are calculated as

//! constants. In order to allow users to tune the slab's parameters, we provide

//! a [`Config`] trait which defines these parameters as associated `consts`.

//! The `Slab` type is generic over a `C: Config` parameter.

//!

//! [`Config`]: trait.Config.html

//!

//! # Comparison with Similar Crates

//!

//! - [`slab`]: Carl Lerche's `slab` crate provides a slab implementation with a

//! similar API, implemented by storing all data in a single vector.

//!

//! Unlike `sharded_slab`, inserting and removing elements from the slab

//! requires mutable access. This means that if the slab is accessed

//! concurrently by multiple threads, it is necessary for it to be protected

//! by a `Mutex` or `RwLock`. Items may not be inserted or removed (or

//! accessed, if a `Mutex` is used) concurrently, even when they are

//! unrelated. In many cases, the lock can become a significant bottleneck. On

//! the other hand, this crate allows separate indices in the slab to be

//! accessed, inserted, and removed concurrently without requiring a global

//! lock. Therefore, when the slab is shared across multiple threads, this

//! crate offers significantly better performance than `slab`.

//!

//! However, the lock free slab introduces some additional constant-factor

//! overhead. This means that in use-cases where a slab is _not_ shared by

//! multiple threads and locking is not required, this crate will likely offer

//! slightly worse performance.

//!

//! In summary: `sharded-slab` offers significantly improved performance in

//! concurrent use-cases, while `slab` should be preferred in single-threaded

//! use-cases.

//!

//! [`slab`]: https://crates.io/crates/loom

//!

//! # Safety and Correctness

//!

//! Most implementations of lock-free data structures in Rust require some

//! amount of unsafe code, and this crate is not an exception. In order to catch

//! potential bugs in this unsafe code, we make use of [`loom`], a

//! permutation-testing tool for concurrent Rust programs. All `unsafe` blocks

//! this crate occur in accesses to `loom` `UnsafeCell`s. This means that when

//! those accesses occur in this crate's tests, `loom` will assert that they are

//! valid under the C11 memory model across multiple permutations of concurrent

//! executions of those tests.

//!

//! In order to guard against the [ABA problem][aba], this crate makes use of

//! _generational indices_. Each slot in the slab tracks a generation counter

//! which is incremented every time a value is inserted into that slot, and the

//! indices returned by [`Slab::insert`] include the generation of the slot when

//! the value was inserted, packed into the high-order bits of the index. This

//! ensures that if a value is inserted, removed, and a new value is inserted

//! into the same slot in the slab, the key returned by the first call to

//! `insert` will not map to the new value.

//!

//! Since a fixed number of bits are set aside to use for storing the generation

//! counter, the counter will wrap around after being incremented a number of

//! times. To avoid situations where a returned index lives long enough to see the

//! generation counter wrap around to the same value, it is good to be fairly

//! generous when configuring the allocation of index bits.

//!

//! [`loom`]: https://crates.io/crates/loom

//! [aba]: https://en.wikipedia.org/wiki/ABA_problem

//! [`Slab::insert`]: struct.Slab.html#method.insert

//!

//! # Performance

//!

//! These graphs were produced by [benchmarks] of the sharded slab implementation,

//! using the [`criterion`] crate.

//!

//! The first shows the results of a benchmark where an increasing number of

//! items are inserted and then removed into a slab concurrently by five

//! threads. It compares the performance of the sharded slab implementation

//! with a `RwLock`:

//!

//!

These benchmarks demonstrate that, while the sharded approach introduces

a small constant-factor overhead, it offers significantly better

performance across concurrent accesses.

[benchmarks]: https://github.com/hawkw/sharded-slab/blob/master/benches/bench.rs

[`criterion`]: https://crates.io/crates/criterion

## License

This project is licensed under the [MIT license](LICENSE).

### Contribution

Unless you explicitly state otherwise, any contribution intentionally submitted

for inclusion in this project by you, shall be licensed as MIT, without any

additional terms or conditions.

��������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������������sharded-slab-0.1.4/benches/bench.rs�����������������������������������������������������������������0000644�0000000�0000000�00000015456�00726746425�0015256�0����������������������������������������������������������������������������������������������������ustar �����������������������������������������������������������������0000000�0000000������������������������������������������������������������������������������������������������������������������������������������������������������������������������use criterion::{criterion_group, criterion_main, BenchmarkId, Criterion};

use std::{

sync::{Arc, Barrier, RwLock},

thread,

time::{Duration, Instant},

};

#[derive(Clone)]

struct MultithreadedBench {

start: Arc,

end: Arc,

slab: Arc,

}

impl MultithreadedBench {

fn new(slab: Arc) -> Self {

Self {

start: Arc::new(Barrier::new(5)),

end: Arc::new(Barrier::new(5)),

slab,

}

}

fn thread(&self, f: impl FnOnce(&Barrier, &T) + Send + 'static) -> &Self {

let start = self.start.clone();

let end = self.end.clone();

let slab = self.slab.clone();

thread::spawn(move || {

f(&*start, &*slab);

end.wait();

});

self

}

fn run(&self) -> Duration {

self.start.wait();

let t0 = Instant::now();

self.end.wait();

t0.elapsed()

}

}

const N_INSERTIONS: &[usize] = &[100, 300, 500, 700, 1000, 3000, 5000];

fn insert_remove_local(c: &mut Criterion) {